About Neon

The Neon Console is a Single Page Application (SPA), or rather, a collection of SPAs. Static assets like JavaScript and CSS are served through CloudFront, while the HTML is dynamically rendered by our Go backend.

In this post, we’ll walk through our migration from Webpack to Vite, the challenges we faced, and what we gained along the way.

Our Webpack Setup

Previously, we used a single Webpack configuration file to build all our apps. Unfortunately, build times were slow, and we lacked hot module replacement support, forcing developers to refresh the page after every change.

Webpack built all static resources (JS and CSS) and an assets-manifest.json file using the webpack-assets-manifest plugin. When rendering HTML, our Go backend used this manifest file to serve the correct static resources for each entry point. In the local environment, Webpack watched for file changes, rebuilt the assets, and regenerated the manifest file. On every page load, the Go backend read the manifest and returned updated HTML.

In addition to static HTML, our Go backend injects user properties required for the app to render. This eliminates the need for an additional HTTP request when rendering the app in the browser.
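To make the idea of injected user properties concrete, here is a minimal sketch of how such data could be embedded in the HTML and read back in the browser. It is written in TypeScript for illustration only (our backend is Go), and the `UserProps` shape, the `bootstrap-data` element id, and the function names are all hypothetical.

```typescript
// Hypothetical shape of the per-user data embedded in the page.
interface UserProps {
  id: string;
  email: string;
  featureFlags: string[];
}

// Serialize data for embedding inside a <script> element.
// JSON.stringify alone is not enough: a string value containing "</script>"
// would close the element early, so every "<" is written as \u003c.
function serializeForScript(data: unknown): string {
  return JSON.stringify(data).replace(/</g, "\\u003c");
}

// Server side: produce the tag that carries the data (assumed element id).
function renderBootstrapScript(props: UserProps): string {
  const payload = serializeForScript(props);
  return `<script id="bootstrap-data" type="application/json">${payload}</script>`;
}

// Browser side: parse the text content of that element.
// JSON.parse turns \u003c back into "<", so the data round-trips unchanged.
function readBootstrapData(scriptText: string): UserProps {
  return JSON.parse(scriptText) as UserProps;
}
```

In the browser, the text passed to `readBootstrapData` would typically come from `document.getElementById("bootstrap-data")?.textContent`, so the app can render without a separate request for user data.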

- Build time (with Webpack): 20 seconds

- Incremental build time: ~1.5 seconds

Vite on The Horizon

We’ve all heard that Vite is the go-to tool for speed and simplicity. While we might have been able to achieve similar results with Webpack, we decided to give Vite a try.

Our migration goals were:

- Support hot module replacement (HMR)

- Improve build times

- Simplify the setup by reducing the number of dependencies

- Unify tooling around a single core (we were already using Vitest for unit testing and Vite-based Storybook)

We started by migrating our admin application and, once confident with the setup, migrated the rest of the apps in a single change. All users were transitioned to the new Vite-based build in the same deployment—reflecting our confidence that everything would work as expected.

Migration Challenges

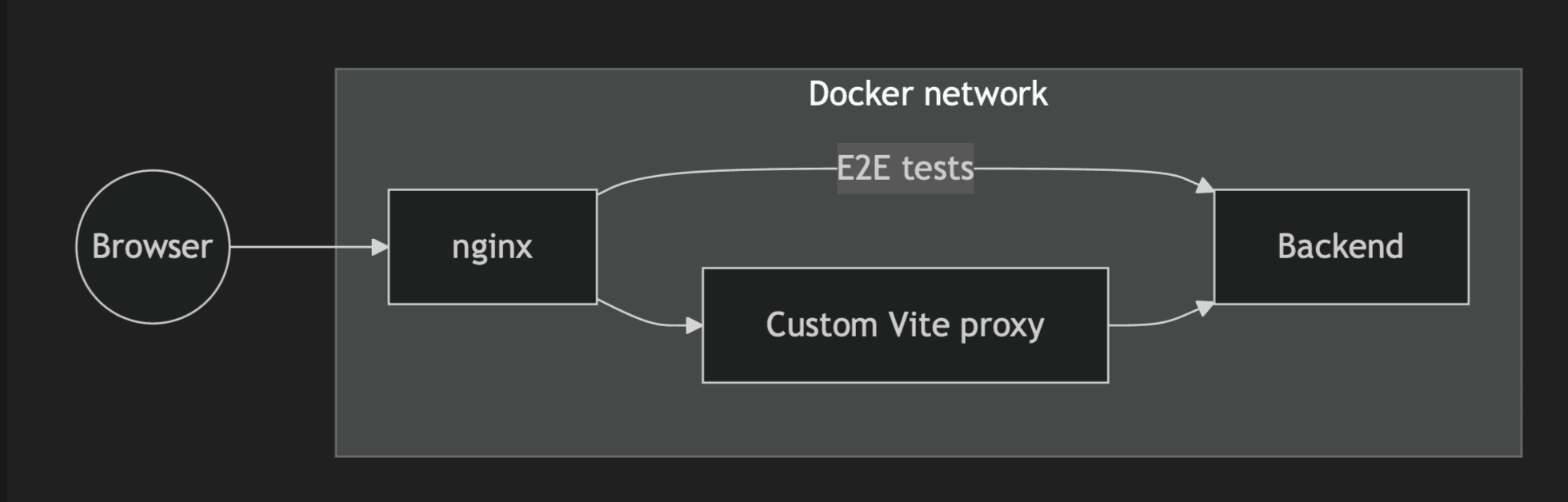

Unfortunately, we couldn’t simply use a Vite dev server because HTML generation is handled by the backend. This required us to build a custom proxy using the Vite server.

Fortunately, Vite offers a convenient JS API that is straightforward to use when more control is needed. Additionally, its documentation includes a helpful guide on integrating Vite into existing backend applications.

Here’s how it works locally:

- All entry points are listed in `vite.config.js` to build JS bundles. We don’t use HTML entry points (for most apps).

- Production assets are built and included in the backend Docker image. On startup, the backend reads the `manifest.json` file generated by Vite and imports all assets while generating HTML.

- Our custom Vite proxy reads the HTML response and replaces production assets with Vite dev imports.

- Vite transforms the HTML and injects development scripts.

- Developers interact with an HMR-enabled app.

- End-to-end (E2E) tests bypass the proxy entirely, allowing us to test production bundles without being slowed down by Vite.
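The manifest step above can be sketched as follows. This is an illustration in TypeScript, not our actual backend code (which is written in Go). It assumes the documented Vite manifest fields `file`, `isEntry`, `imports`, and `css`; the function names and the `base` parameter are hypothetical.

```typescript
// Subset of the fields Vite writes for each chunk in its build manifest.
interface ManifestChunk {
  file: string;
  src?: string;
  isEntry?: boolean;
  imports?: string[];
  css?: string[];
}
type Manifest = Record<string, ManifestChunk>;

interface EntryAssets {
  js: string;        // the entry chunk itself
  css: string[];     // stylesheets of the entry and of every imported chunk
  preload: string[]; // statically imported chunks, candidates for modulepreload
}

// Walk the static import graph of one entry point and collect its assets.
function collectEntryAssets(manifest: Manifest, entry: string): EntryAssets {
  const root = manifest[entry];
  if (!root || !root.isEntry) {
    throw new Error(`Unknown entry point: ${entry}`);
  }
  const css = new Set<string>(root.css ?? []);
  const preload = new Set<string>();
  const seen = new Set<string>([entry]);

  const visit = (keys: string[] = []): void => {
    for (const key of keys) {
      if (seen.has(key)) continue; // shared chunks can be reached more than once
      seen.add(key);
      const chunk = manifest[key];
      if (!chunk) continue;
      preload.add(chunk.file);
      (chunk.css ?? []).forEach((href) => css.add(href));
      visit(chunk.imports);
    }
  };
  visit(root.imports);

  return { js: root.file, css: Array.from(css), preload: Array.from(preload) };
}

// Render the tags a backend would place in the HTML for one entry point.
function renderEntryTags(manifest: Manifest, entry: string, base = "/"): string {
  const assets = collectEntryAssets(manifest, entry);
  return [
    ...assets.css.map((href) => `<link rel="stylesheet" href="${base}${href}">`),
    `<script type="module" src="${base}${assets.js}"></script>`,
    ...assets.preload.map((href) => `<link rel="modulepreload" href="${base}${href}">`),
  ].join("\n");
}
```

Following `imports` recursively matters because Vite splits shared code into separate chunks, and the CSS belonging to those chunks is listed on the chunk rather than on the entry.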

Excluding comments, our custom proxy is only 150 lines of code and uses the http-proxy dependency—the same one Vite uses internally.
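The core transformation such a proxy performs on the HTML can be sketched in a few lines. This is a simplified illustration rather than our actual proxy: the `vite-entry` comment format, the function name, and the `devBase` parameter are assumptions made for the example. The backend is assumed to wrap each entry point's production tags in a pair of HTML comments that name the source entry.

```typescript
// Assumed marker format emitted by the backend around each entry's tags:
//   <!-- vite-entry:src/admin/main.tsx -->
//   ...production <link> and <script> tags...
//   <!-- /vite-entry -->
const ENTRY_BLOCK = /<!--\s*vite-entry:(\S+?)\s*-->[\s\S]*?<!--\s*\/vite-entry\s*-->/g;

// Replace every marked block of production assets with a single module
// import of the source entry point, which the Vite dev server can serve.
// The global flag lets one HTML response contain several apps.
function rewriteForDev(html: string, devBase = "/"): string {
  return html.replace(
    ENTRY_BLOCK,
    (_block: string, entry: string) =>
      `<script type="module" src="${devBase}${entry}"></script>`,
  );
}
```

The rewritten HTML would then be handed to Vite for its own HTML transformation, which adds the development scripts needed for HMR.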

- Build time (with Vite): 22 seconds

Surprisingly, our build times didn’t improve and even became slightly slower. This caught us off guard, but the HMR functionality and reduction in dependencies were still significant wins.

Surprises That We Hit Along the Way

- We tried to implement a proxy ourselves using `fetch`. This worked for basic scenarios but failed in others. Switching to `http-proxy` made all flows work.

- Our backend might return multiple SPAs in a single HTML response. The custom Vite proxy needed to support that as well.

- Finding the entry point for a production asset was a challenge. The backend responds with production assets, so the custom Vite proxy needed to locate the assets in the HTML and replace them with a single dev import referencing the correct entry point.

  - We used HTML comments to mark the assets.

- We have both frontend and backend developers working with the same dev setup. We wanted our change to be as unnoticeable as possible.

  - This meant running the custom Vite proxy in a container behind Nginx.

- Supporting E2E headless tests

  - The Vite dev setup loads every file in a separate request. For our main app, the browser needs to load ~1500 files, which makes cold (empty cache) page loads slow.

  - For E2E tests, we introduced a second way to run our services locally: we skip the proxy altogether. Nginx forwards requests directly to the backend, and production assets are served and tested.

- We had to rename one entry point because it conflicted with an API endpoint. The entry point JS file had the same name as the API endpoint, and Vite couldn’t handle it.

- The `@vitejs/plugin-react-swc` plugin panicked on syntax errors, forcing developers to restart the proxy. We switched back to `@vitejs/plugin-react` due to its better stability and error handling. The Babel-based plugin was already a huge win for us, especially with HMR.

- We encountered `Unable to preload CSS for /assets/assets/index-I-pmUzqQ.css` errors in production. It seems we are not the only ones experiencing this.

- We wanted to use Content Security Policy (CSP) headers in the local environment as well. The custom Vite proxy extends CSP to support Vite’s WebSocket server.

- Global `process.env.*` variables are not defined by default.

- No incremental builds

  - Developers who skip the custom Vite proxy won’t see any UI changes until they rebuild the app completely.
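As an illustration of the CSP point in the list above, here is a minimal sketch of how a proxy could extend a `Content-Security-Policy` header so the browser may open Vite’s HMR WebSocket. The function name is hypothetical, and the WebSocket origin in the usage note is an assumption about a local setup, not our actual configuration.

```typescript
// Add a WebSocket origin to the connect-src directive of a CSP header value.
// connect-src is the directive that governs WebSocket connections.
function allowViteWebSocket(csp: string, wsOrigin: string): string {
  // Parse "name value value; name value" into [name, ...values] arrays.
  const directives = csp
    .split(";")
    .map((directive) => directive.trim().split(/\s+/))
    .filter((parts) => parts[0] !== "");

  const find = (name: string) =>
    directives.find((parts) => parts[0].toLowerCase() === name);

  const connectSrc = find("connect-src");
  if (connectSrc) {
    if (!connectSrc.includes(wsOrigin)) connectSrc.push(wsOrigin);
  } else {
    const defaultSrc = find("default-src");
    // With neither directive present, connections are not restricted,
    // so adding connect-src would only make the policy stricter.
    if (!defaultSrc) return csp;
    // connect-src falls back to default-src, so copy its sources first.
    // 'none' is dropped because it is only valid as the sole source.
    const inherited = defaultSrc.slice(1).filter((value) => value !== "'none'");
    directives.push(["connect-src", ...inherited, wsOrigin]);
  }
  return directives.map((parts) => parts.join(" ")).join("; ");
}
```

For example, calling `allowViteWebSocket(header, "ws://localhost:5173")` on the backend’s header before forwarding the response would permit a WebSocket to a dev server on Vite’s default port, while leaving every other directive untouched.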

Final Outcomes

- Build times stayed roughly the same (22 seconds with Vite versus 20 seconds with Webpack)

- HMR works great

- The Vite configuration is significantly simpler, with fewer plugins and dependencies

- A custom Vite proxy had to be implemented

- Frontend developers are happy 🙂

- Configuration is now unified across apps, Vitest, and Storybook

- Vite build artifacts are split into more modules, whereas Webpack produced a single JS and CSS import per app

- Initial page loads in the local environment take slightly longer, as the browser must fetch ~1500 files for our main app, but we now refresh much less frequently

Follow-Up Work

Not having faster build times was a huge disappointment for all of us. After analyzing the flamegraphs that Vite produces, we found we were hitting Rollup limits. We are looking forward to the future of Vite powered by Rolldown!

However, we noticed that our Ace Editor dependency could be patched to reduce the number of modules Vite needs to process. Using the yarn patch utility, we modified the library to depend on ~450 fewer modules. This change alone sped up our build times by 10 seconds (~50%)!